
Project lead:

Funding:

Resources:

Brief description of the project

In all domains of science, visualization is essential for deriving meaning from data. In web archiving, data is linked data that may be visualized as a graph, with web resources as nodes and outlinks as edges. This proposal is for developing the core functionality of a scalable link visualization environment and documenting potential research use cases within the domain of web archiving for future development.

While tools such as Gephi exist for visualizing linked data, they cannot operate on data that exceeds the capacity of a standalone computing device. The main objective of the project was for the link visualization environment to operate on data kept in a remote data store, enabling it to scale up to the magnitude of a web archive with tens of billions of web resources. With the data store as a separate component accessed over the network, the data can grow substantially on a server or cloud infrastructure and can also be updated live as the web archive grows. As such, the environment may be thought of as Google Maps for web archives.

The project is broken down into 3 components: a link service, where linked data is stored; a link indexer, for extracting outlink information from the web archive and inserting it into the link service; and a link visualizer, for rendering and navigating linked data retrieved through the link service.

The Bibliotheca Alexandrina led design and development. The National Library of New Zealand facilitated collecting feedback from IIPC institutions and researchers through the IIPC Research Working Group.

Goals, outcomes, and deliverables

The following summarizes project outputs:

- link-serv – versioned graph data service

- link-indexer – linked data collection tool

- link-viz – web frontend for temporal graph rendering and exploration

- Research use cases for web archive graph visualization

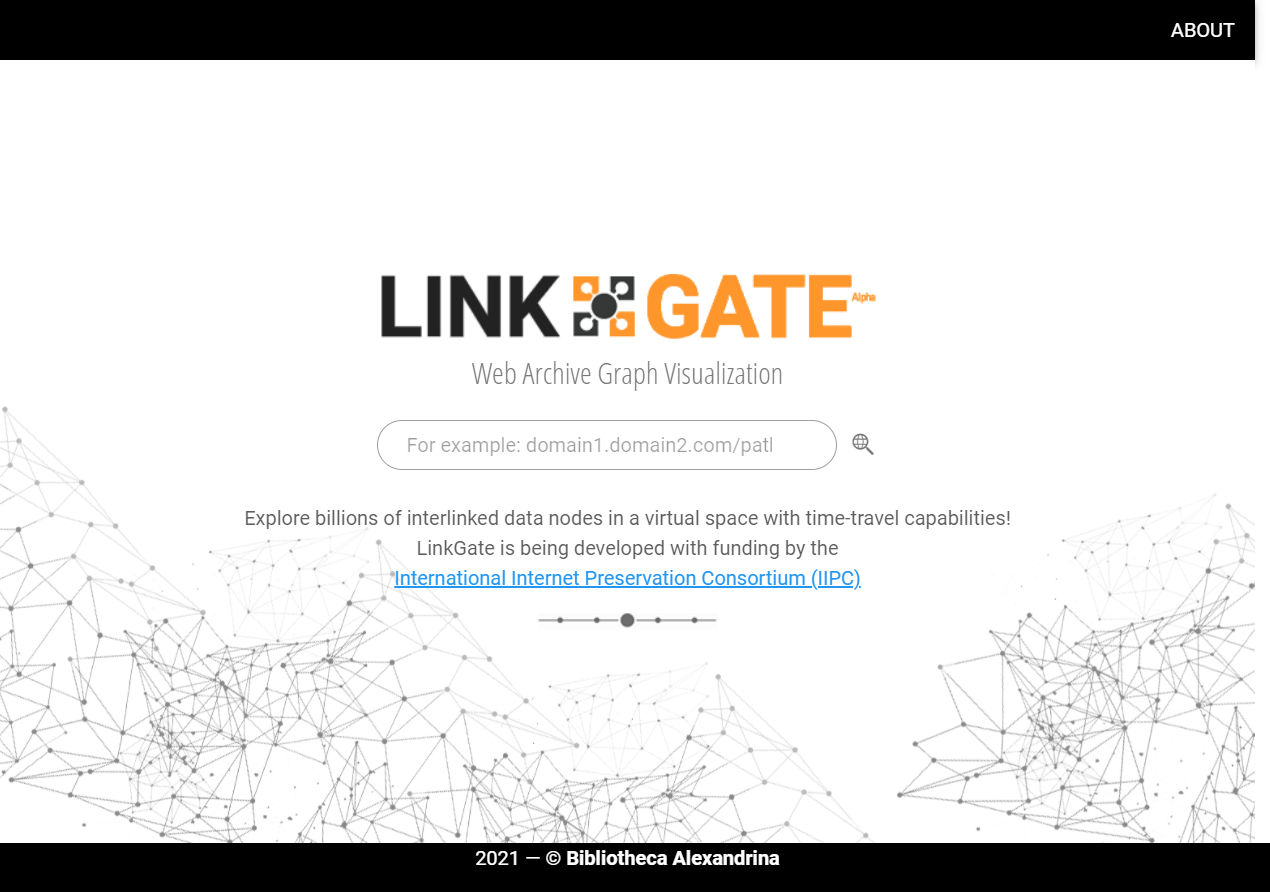

- LinkGate.bibalex – LinkGate deployment with sample data

Source code for link-serv, link-indexer, and link-viz is licensed under the GNU General Public License, version 3, and is available on GitHub, each in its own repository. The documented research use cases are available on the wiki of the project’s main GitHub repository. Deployment automation code is also available in the project’s main repository:

https://github.com/arcalex/linkgate

How the project furthers the IIPC strategic plan

The proposed link visualization environment sought to further the IIPC mission to “make accessible knowledge and information [acquired] from the Internet” by offering an alternative access paradigm where data is represented as nodes and edges on a graph, which would complement the traditional web archive access paradigm where data is represented as entries on a calendar. The proposed alternative access paradigm sought to eventually empower researchers with a tool for visually examining the interlinking among resources in a web archive and searching for patterns that may lead to certain conclusions.

As a development project, the proposed effort aimed to contribute to the IIPC goal to “foster the development and use of common tools” and was in line with the Tools Development actions of the IIPC Strategic Plan. Specifically, the project’s general architecture of 3 separate components that work together to deliver the link visualization environment, the link-serv API provided for interoperability, and the schema designed for representing temporal linked data in the data store were all consistent with the Tools Development short-term action to “foster a rich and interoperable tool environment based on modular pieces of software and a consensus set of APIs for the whole web archiving chain.” To “encourage use case-driven development” (also a short-term Tools Development action), the project also includes the collection of “Research Use Cases for Web Archive Visualization” to supply a backlog of features for future development that builds upon the core functionality.

Detailed description of the project (project proposal)

The link visualization environment intends to be a web archive access tool geared towards research use cases.

The project is architected around 3 components:

- link-serv

- link-indexer

- link-viz

link-serv is a service built on top of a NoSQL data store capable of holding tens of billions of interlinked nodes, where nodes represent web resources and edges represent outlinks. The service exposes an API for inserting and retrieving link information.

At its core, the API looks as follows:

| API | Description | Parameters | Return |
| --- | --- | --- | --- |
| put | Insert web resource (node) and list of outlinks (edges) | | |
| get | Retrieve graph structure starting at given web resource (node) | | Nested outlinks up to depth as JSON |
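
To make the put/get pair concrete, here is a minimal in-memory sketch in Python. It only models the behavior described in the table, not link-serv itself (which is backed by a NoSQL store); the class name `LinkStore`, the parameter names `url`, `outlinks`, and `depth`, and the JSON shape of the nested result are illustrative assumptions.

```python
import json


class LinkStore:
    """Hypothetical in-memory stand-in for the link-serv put/get API."""

    def __init__(self):
        # Adjacency map: source URL (node) -> list of outlink URLs (edges).
        self._outlinks = {}

    def put(self, url, outlinks):
        """Insert a web resource (node) and its list of outlinks (edges)."""
        self._outlinks[url] = list(outlinks)
        # Register outlink targets as nodes without overwriting existing ones.
        for target in outlinks:
            self._outlinks.setdefault(target, [])

    def get(self, url, depth):
        """Return the graph starting at url as nested outlinks up to depth, as JSON."""
        return json.dumps(self._expand(url, depth, frozenset()))

    def _expand(self, url, depth, ancestors):
        node = {"url": url, "outlinks": []}
        # Stop at the depth limit, or when the URL already appears on the
        # current path (a loop back to an ancestor).
        if depth == 0 or url in ancestors:
            return node
        ancestors = ancestors | {url}
        node["outlinks"] = [
            self._expand(target, depth - 1, ancestors)
            for target in self._outlinks.get(url, [])
        ]
        return node
```

For example, after `put("http://a.example/", ["http://b.example/"])`, calling `get("http://a.example/", 1)` returns the node for `http://a.example/` with `http://b.example/` nested as its only outlink.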

In development phases beyond this proposal, additional, more complex retrieval methods will need to be defined in order to implement features based on the use cases to be identified.

Candidate technology for implementing the link-serv API is Node.js.

The primary challenge in implementing link-serv lies in building a robust store for linked data that is capable of scaling up. Part of the initial effort within this project goes toward investigating NoSQL alternatives and adapting the chosen data store to the project’s needs. Another challenge is to design an appropriate data schema for representing temporal web data in a graph data store.
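
To illustrate the schema question, one possible representation (an assumption for illustration, not the project’s chosen design) stores each URL as a node holding a sorted list of capture timestamps, each paired with the outlinks seen in that capture. The graph “as of” a time T, which a time slider would need, then uses the latest capture at or before T for each node. Timestamps are assumed to be 14-digit strings (as in Wayback-style URLs), which sort correctly as plain strings; the class and method names are hypothetical.

```python
import bisect
from dataclasses import dataclass, field


@dataclass
class VersionedNode:
    """Hypothetical temporal node: one URL with many timestamped captures."""
    url: str
    timestamps: list = field(default_factory=list)  # sorted, e.g. "20200131120000"
    outlinks: list = field(default_factory=list)    # outlinks[i] belongs to timestamps[i]

    def add_capture(self, timestamp, links):
        # Keep timestamps sorted so that lookups can use binary search.
        i = bisect.bisect_left(self.timestamps, timestamp)
        if i < len(self.timestamps) and self.timestamps[i] == timestamp:
            self.outlinks[i] = list(links)  # same capture indexed again: overwrite
        else:
            self.timestamps.insert(i, timestamp)
            self.outlinks.insert(i, list(links))

    def as_of(self, timestamp):
        """Outlinks of the latest capture at or before timestamp, or None."""
        i = bisect.bisect_right(self.timestamps, timestamp)
        return None if i == 0 else self.outlinks[i - 1]
```

Keeping captures per node, rather than one graph copy per crawl, avoids duplicating nodes that did not change between crawls; whether that trade-off suits a given graph store is exactly what the schema design has to settle.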

The Bibliotheca Alexandrina has experience developing big data applications through its contribution to the development of the Encyclopedia of Life project in collaboration with the Smithsonian Institution.

Candidate technology for implementing the link-serv graph data store includes Neo4j, MongoDB, and Cassandra.

link-indexer is a command-line tool that operates on WAT files to extract outlink information and feed the extracted information to link-serv through the put API.

WAT (Web Archive Transformation) files encapsulate metadata from WARC files. The archive-metadata-extractor tool may be used to produce WAT files from WARC or legacy ARC files.
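
A minimal sketch of the link-indexer extraction step, in Python as proposed. It assumes that the JSON payload of one WAT metadata record is already available as a string (reading the WAT container itself is left out) and that the record follows the commonly seen WAT layout (`Envelope` → `Payload-Metadata` → `HTTP-Response-Metadata` → `HTML-Metadata` → `Links`); check these field names against your own WAT files. The function name is hypothetical. Relative links are resolved against the page URL; filtering on each link’s `path` (for example, keeping only `A@/href` anchors and dropping embedded images) is a design choice left open here.

```python
import json
from urllib.parse import urljoin


def extract_outlinks(wat_json):
    """Return (url, date, outlinks) for one WAT metadata record, or None.

    Assumes the commonly seen WAT field layout; records that are not HTTP
    responses (or lack a target URI) carry no HTML links and are skipped.
    """
    envelope = json.loads(wat_json).get("Envelope", {})
    header = envelope.get("WARC-Header-Metadata", {})
    base = header.get("WARC-Target-URI")
    if header.get("WARC-Type") != "response" or not base:
        return None
    links = (envelope.get("Payload-Metadata", {})
                     .get("HTTP-Response-Metadata", {})
                     .get("HTML-Metadata", {})
                     .get("Links", []))
    # Links may be relative, so resolve each one against the page URL.
    outlinks = [urljoin(base, link["url"]) for link in links if "url" in link]
    return base, header.get("WARC-Date"), outlinks
```

The returned triple maps directly onto a put call: the page URL and capture date identify the node, and the resolved outlinks become its edges.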

To allow for live updating of the link-serv graph data store with data from a running web crawl, the web crawler, where applicable, may be made to write WAT files in addition to WARC files, or a scheduled process may be set to check for new WARC files on disk and then trigger archive-metadata-extractor.
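
For the scheduled-process option, the check for new WARC files could start with a directory scan like the following. This is a sketch under stated assumptions: the function name and the caller-maintained set of already processed file names are hypothetical, and the actual invocation of archive-metadata-extractor on each new file is deliberately left out, since it depends on the local setup. Files still being written are skipped as long as the crawler gives them a different suffix (Heritrix, for example, appends `.open` while a WARC file is in progress).

```python
from pathlib import Path


def find_new_warcs(directory, already_processed):
    """Return WARC files in directory whose names are not in already_processed.

    The caller should add a file's name to already_processed only after WAT
    extraction for it has succeeded, so failed files are retried next scan.
    """
    return [
        path for path in sorted(Path(directory).iterdir())
        # Only finished WARC files; in-progress names such as *.warc.gz.open
        # do not end with these suffixes and are therefore skipped.
        if path.name.endswith((".warc", ".warc.gz"))
        and path.name not in already_processed
    ]
```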

As link-indexer is intended to run on large volumes of data, it needs to be optimized for performance. It also needs a command-line syntax that makes it easy to execute in batch in distributed environments via automation tools.
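
One way to make distributed batch execution simple, sketched with hypothetical flags (none of them come from the project): every worker receives the same file list plus its own `--shard` number, and keeps only the files whose stable hash falls on that shard, so an automation tool only has to vary one number per job. `zlib.crc32` is used instead of Python’s built-in `hash()`, because string hashing is randomized per process and would split the files inconsistently across workers.

```python
import argparse
import zlib


def parse_args(argv=None):
    # Hypothetical link-indexer command line; flag names are illustrative only.
    parser = argparse.ArgumentParser(prog="link-indexer")
    parser.add_argument("wat_files", nargs="+", help="WAT files to index")
    parser.add_argument("--server", default="http://localhost:8080",
                        help="link-serv endpoint (hypothetical default)")
    parser.add_argument("--shard", type=int, default=0, help="index of this worker")
    parser.add_argument("--num-shards", type=int, default=1, help="total number of workers")
    return parser.parse_args(argv)


def files_for_this_worker(args):
    """Files owned by this worker: stable CRC32 of the name modulo worker count."""
    return [f for f in args.wat_files
            if zlib.crc32(f.encode("utf-8")) % args.num_shards == args.shard]
```

For instance, four jobs could run `link-indexer *.wat.gz --shard N --num-shards 4` with N from 0 to 3, and together they would cover every file exactly once.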

Issues to consider while implementing link-indexer include how to interpret outlink information, such as whether to treat duplicate outlinks coming from the same web resource as one, and whether to attach a repetition count to duplicate outlinks.
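
The two options above can be combined in a single step: collapse repeated outlinks from one page into a single edge and keep the repetition count as an edge attribute (for example, a weight). A minimal sketch; the function name is hypothetical.

```python
from collections import Counter


def collapse_duplicates(outlinks):
    """Merge repeated outlinks from one page into (target, count) pairs.

    Counter preserves first-appearance order, so the output is deterministic.
    """
    return list(Counter(outlinks).items())
```

Keeping the count costs little at indexing time and leaves the decision to ignore it, or to use it for edge thickness, to the visualizer.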

The Bibliotheca Alexandrina has experience working with WARC files and has in the past developed WARCrefs, a set of tools for post-crawl web archive deduplication.

Candidate technology for implementing the link-indexer command-line tool is Python.

link-viz is a web-based application that implements the user interface to the link visualization environment. link-viz user interface elements include:

- URL input field for specifying a web resource (node) to jump to in the graph

- Time slider for selecting the time instance for data retrieved from the web archive

- Graph area where the graph is rendered

- Zoom level selector for selecting how much graph data to render based on depth away from the URL in focus

In future development phases of the project, the following additional elements would be introduced:

- Tools (Finders), plug-ins for exploring the graph, e.g., PathFinder for finding all paths from one node to another given a certain depth and LoopFinder for finding all loops that go through a node given a certain depth

- Vizors, plug-ins for enriching the environment with additional data, e.g., GeoVizor for superimposing nodes on a map of the world based on geographic location
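
The PathFinder and LoopFinder ideas can both be expressed as a depth-bounded depth-first search over outlinks. The sketch below works on an in-memory adjacency dict `{url: [outlink, ...]}`, which is an assumption (a real Finder would query link-serv), and the function names are hypothetical. The depth bound matters in practice, since the number of paths can grow exponentially with depth.

```python
def find_paths(graph, source, target, max_depth):
    """All simple paths from source to target using at most max_depth edges."""
    results = []

    def walk(path):
        if len(path) - 1 == max_depth:
            return  # depth budget used up
        for nxt in graph.get(path[-1], []):
            if nxt == target:
                results.append(path + [nxt])  # reached the target: record the path
            elif nxt not in path:
                walk(path + [nxt])            # keep exploring without revisiting nodes

    walk([source])
    return results


def find_loops(graph, node, max_depth):
    """All loops through node using at most max_depth edges."""
    # A loop is a path that starts and ends at the same node.
    return find_paths(graph, node, node, max_depth)
```

For example, with `g = {"A": ["B", "C"], "B": ["C", "A"], "C": ["A"]}`, `find_paths(g, "A", "C", 2)` finds `A → B → C` and `A → C`, and `find_loops(g, "A", 2)` finds `A → B → A` and `A → C → A`.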

The visualizer has to render the graph so as to avoid clutter, especially as the graph grows, while still delivering a smooth navigational experience.

Candidate technologies for drawing the graph include D3.js, Sigma.js, and vis.js.

The Bibliotheca Alexandrina has extensive experience in computer graphics and visualization, including the development of the Virtual Museum Framework (VirMuF) as part of the VI-SEEM project under European Commission funding. In the domain of web archive visualization, the BA developed the Crawl Log Animator in 2016, an application for visualizing a crawl log as a dynamic graph.

While the Crawl Log Animator is related to the idea proposed herein, the two are different in that:

- the former operates on a crawl log, while the latter operates on a full web archive

- the former will always draw a tree, while the latter may draw loops

- the former is a monolithic piece of software, while the latter is modular

- the former has no interactive features, while the latter aims to be navigable and explorable via plug-in finders and vizors

To efficiently handle rendering the graph as the large dataset is navigated, link-viz will incorporate the following techniques:

- Force-directed spring model. Spring-like attractive forces are assigned to the edges of the graph to pull each edge’s endpoints towards each other, while repulsive forces like those between electrically charged particles push all pairs of nodes apart. In equilibrium, edges tend to have uniform length, and nodes that are not connected by an edge tend to be drawn further apart, resulting in a visually pleasing graph where nodes and edges tend not to overlap or occlude each other. (See “Force-directed graph drawing” on Wikipedia.)

- Interactivity. Users can manually move and reorganize nodes and entire subtrees in the 2D space to their liking, while the entire graph responds in real time to the applied forces to prevent overlapping.

- Collapsible nodes. Users can collapse entire subtrees into a single parent node, revealing details only when needed while keeping the rest of the graph clean and tidy. Visual cues on collapsed nodes will convey the total size of the graph even when parts of it are collapsed. For example, node size grows with the number of children collapsed beneath it, and node color represents how deep the collapsed subtree is.

- Filtering. Filtering options will be available to limit the number of nodes displayed simultaneously to those that are important to the user.

- Reveal detail only on demand. Graph details such as edge labels and long node captions can be toggled for the entire graph or subsets of it to reduce the number of pixels used whenever needed, resulting in cleaner graphs that are easier to understand.
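
The force-directed spring model can be illustrated with a small pure-Python sketch in the spirit of the Fruchterman-Reingold algorithm: repulsion of k²/d between every pair of nodes, attraction of d²/k along every edge, and a step size (“temperature”) that cools each round. It is for illustration only; in link-viz this would more likely come from one of the candidate libraries (D3.js, for instance, has a force-simulation module). The function name, initial temperature, cooling factor of 0.95, and iteration count are assumptions. The pairwise loop costs O(n²) per iteration, which is acceptable only for the subset of the graph rendered at any one time.

```python
import math
import random


def spring_layout(nodes, edges, iterations=100, width=1.0, seed=0):
    """Force-directed layout in the spirit of Fruchterman-Reingold.

    Returns {node: (x, y)}. Positions are not clamped to a frame.
    """
    nodes = list(nodes)
    if not nodes:
        return {}
    rng = random.Random(seed)  # fixed seed gives a reproducible layout
    pos = {n: [rng.uniform(0, width), rng.uniform(0, width)] for n in nodes}
    k = width / math.sqrt(len(nodes))  # ideal distance between connected nodes
    temperature = width / 10           # largest step a node may take; cools each round

    for _ in range(iterations):
        disp = {n: [0.0, 0.0] for n in nodes}
        # Repulsion: every pair of nodes is pushed apart with force k^2 / d.
        for i, a in enumerate(nodes):
            for b in nodes[i + 1:]:
                dx, dy = pos[a][0] - pos[b][0], pos[a][1] - pos[b][1]
                d = max(math.hypot(dx, dy), 1e-9)
                f = k * k / d
                disp[a][0] += dx / d * f
                disp[a][1] += dy / d * f
                disp[b][0] -= dx / d * f
                disp[b][1] -= dy / d * f
        # Attraction: each edge pulls its two endpoints together with force d^2 / k.
        for a, b in edges:
            dx, dy = pos[a][0] - pos[b][0], pos[a][1] - pos[b][1]
            d = max(math.hypot(dx, dy), 1e-9)
            f = d * d / k
            disp[a][0] -= dx / d * f
            disp[a][1] -= dy / d * f
            disp[b][0] += dx / d * f
            disp[b][1] += dy / d * f
        # Move each node along its net force, but no further than the temperature.
        for n in nodes:
            dx, dy = disp[n]
            d = math.hypot(dx, dy)
            if d > 0:
                step = min(d, temperature)
                pos[n][0] += dx / d * step
                pos[n][1] += dy / d * step
        temperature *= 0.95

    return {n: (x, y) for n, (x, y) in pos.items()}
```

The cooling schedule is what lets the layout settle: early rounds allow large moves to untangle the graph, and later rounds only make small adjustments, which also suits the interactive re-layout described above.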

The following is a mockup of the visualization environment.

Interoperability with other tools: A plugin for the Gephi graph visualization software defines an API for streaming graph data. The Gephi graph streaming plugin allows one instance of the application to render graph data streamed from another instance. While this is useful for visualizing data stored remotely, it remains limited by how much data a Gephi instance can handle, which, according to the Gephi website, is 100,000 nodes (a figure also checked in testing). If a scalable remote data store that supports the Gephi graph streaming API were implemented, existing Gephi users would be able to access larger datasets. link-serv intends to maintain compatibility with the Gephi graph streaming API so that Gephi can be used as an alternative to the link-viz visualization frontend.
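
A sketch of what Gephi compatibility could involve on the data side: converting an adjacency list retrieved from link-serv into Gephi Graph Streaming events. It assumes the JSON event format described in the Gephi Graph Streaming plugin documentation, where an `"an"` object adds nodes and an `"ae"` object adds edges, written one event per line. The function name, the use of URLs as node ids, and the `source->target` edge-id convention are assumptions, outlinks are assumed to be deduplicated already, and the transport (HTTP endpoint, workspace) is left out.

```python
import json


def to_gephi_events(adjacency):
    """Turn {source_url: [target_url, ...]} into Graph Streaming JSON lines.

    Emits one "an" (add node) event per URL, then one "ae" (add edge)
    event per outlink, as newline-separated JSON objects.
    """
    # Every source and target becomes a node; dict.fromkeys dedups in order.
    urls = dict.fromkeys(
        list(adjacency) + [t for targets in adjacency.values() for t in targets])
    lines = [json.dumps({"an": {url: {"label": url}}}) for url in urls]
    for src, targets in adjacency.items():
        for dst in targets:
            edge = {"source": src, "target": dst, "directed": True}
            lines.append(json.dumps({"ae": {f"{src}->{dst}": edge}}))
    return "\n".join(lines)
```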

As a visualization frontend, link-viz intends to handle large datasets efficiently by loading and unloading the subsets of interest as the dataset is navigated, similar to how a maps application handles the map data the user is interested in at a given moment despite having access to far more data in its remote data store. Being web-based will also give it wider reach and allow for possibly adding collaborative multi-user features in the future.
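
The maps-style loading and unloading described above can be approximated with a least-recently-used cache of subgraphs, much as map clients keep recently viewed tiles. In this sketch the cache key (focus URL, timestamp, depth), the capacity, and the class name are assumptions, and `fetch` stands for whatever call retrieves a subgraph from link-serv.

```python
from collections import OrderedDict


class SubgraphCache:
    """Keep recently viewed subgraphs in memory; evict the least recently used.

    fetch is any callable (url, timestamp, depth) -> subgraph, for example a
    wrapper around the link-serv get API (an assumption in this sketch).
    """

    def __init__(self, fetch, capacity=64):
        self._fetch = fetch
        self._capacity = capacity
        self._entries = OrderedDict()

    def get(self, url, timestamp, depth):
        key = (url, timestamp, depth)
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            return self._entries[key]
        subgraph = self._fetch(url, timestamp, depth)  # load from the remote store
        self._entries[key] = subgraph
        if len(self._entries) > self._capacity:
            self._entries.popitem(last=False)  # unload the least recently used
        return subgraph
```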

Demonstration: The visualization environment is to be deployed for demonstration purposes on Bibliotheca Alexandrina infrastructure already in existence.

Research Use Cases for Web Archive Visualization

As the tool is intended for use by researchers, future development beyond the core functionality described in this proposal is to be driven by use cases collected during this phase of the project.

The National Library of New Zealand plans for the following key activities:

- Develop a use case template (and examples) with specific focus on visualizations for web-based material

- During the first 6 months of the project, run events (webinars, etc.) for IIPC members and other interested parties to test the template and begin collecting potential use cases

- After the first 6 months of the project, use a version of the new tool (proof-of-concept or prototype) to work more formally with the IIPC community to define use cases for future development

- Collate the full set of use cases and requirements documentation

The National Library of New Zealand has extensive experience leading working groups and working collaboratively with other institutions to develop community resources and tools such as PREMIS, METS, the Preservation Storage Criteria, the Web Curator Tool, and the NZ Metadata Extraction Tool. In addition, NLNZ has been actively involved in efforts to define researcher requirements for working with web archives, including hiring a Digital Research Coordinator to conduct interviews and surveys to understand researcher needs.

Project schedule of completion

| Milestone | Description | Date of Completion | Lead Partner |
| --- | --- | --- | --- |
| 1 | Identify technology for link-serv graph data store; perform testing; design data schema for temporal linked data | 2020-02-29 | Bibliotheca Alexandrina |
| 2 | Develop a use case template (and examples) with specific focus on visualizations for web-based material | 2020-02-29 | National Library of New Zealand |
| 3 | Develop prototype link-serv core API and tie it into the data store | 2020-04-30 | Bibliotheca Alexandrina |
| 4 | Develop prototype link-indexer outlink extraction from WAT files and insertion into link-serv | 2020-04-30 | Bibliotheca Alexandrina |
| 5 | Develop prototype link-viz graph rendering, URL navigation, time control, and depth control | 2020-04-30 | Bibliotheca Alexandrina |
| 6 | At WAC2020, demo an early prototype to gather feedback to incorporate into the rest of the project | 2020-05-13 | Bibliotheca Alexandrina and National Library of New Zealand |
| 7 | Deploy demo on Bibliotheca Alexandrina infrastructure with small dataset | 2020-06-30 | Bibliotheca Alexandrina |
| 8 | Run events (webinars, etc.) for IIPC members and other interested parties to test the template and begin collecting potential use cases | 2020-07-31 | National Library of New Zealand |
| 9 | Refine link-serv, link-indexer, and link-viz, taking into account feedback from the deployed demo of the first prototype | 2021-02-15 | Bibliotheca Alexandrina |
| 10 | Load large dataset into deployed demo | 2021-02-15 | Bibliotheca Alexandrina |
| 11 | Use deployed demo of prototype to work with IIPC research community to formalize use cases; collate set of use cases and requirements documentation | 2021-03-31 | National Library of New Zealand |
| 12 | Finalize project technical documentation | 2021-03-31 | Bibliotheca Alexandrina |